This is one of the articles I wrote for GitHub. Do check it out and leave your feedback.

Welcome to the world of Deep Reinforcement Learning, the technology powering self-evolving systems. It can solve some of the most challenging AI problems, and many of today's state-of-the-art results are obtained by AI systems based on Deep Reinforcement Learning.

In recent years, the world has witnessed an acceleration of innovations in Deep Reinforcement Learning (DeepRL), such as:

- AlphaGo beating the champion of the game Go in 2016

- OpenAI's PPO (Proximal Policy Optimization) in 2017

- The resurgence of curiosity-driven learning agents in 2018, with Uber AI's Go-Explore and OpenAI's RND

- OpenAI Five, which beat the best Dota players in the world (2016-2019)

- Google's AutoML, which is also powered by DeepRL

As more and more companies leverage DeepRL and discover new and powerful applications, they are calling on data scientists and AI engineers like us to create the next wave of disruptive applications and solutions.

Combining the powerful principles of both deep learning and reinforcement learning, DeepRL is changing the face of many industries, including:

- Medicine: to determine optimal treatments for health conditions and drug therapies.

- Robotics: to improve robotic manipulation with minimal human supervision.

- Industrial Automation: to integrate intelligence into complex and dynamic systems.

- Finance: to increase profitability in trading.

Let us take advantage of the global AI shift! Let us unleash the power of DRL!

This Repo primarily includes

Agent

Environment

Action

State

Reward

Policy

Value function

Function approximator

Markov decision process (MDP)

Dynamic programming (DP)

Monte Carlo methods

Temporal Difference (TD) algorithms

Model

Value Optimization Agents Algorithms

- Deep Q Network (DQN)

- Double Deep Q Network (DDQN)

- Mixed Monte Carlo (MMC)

Policy Optimization Agents Algorithms

- Policy Gradients (PG)

- Asynchronous Advantage Actor-Critic (A3C)

- Deep Deterministic Policy Gradients (DDPG)

- Proximal Policy Optimization (PPO)

General Agents Algorithms

- Direct Future Prediction (DFP)

Imitation Learning Agents Algorithms

- Behavioral Cloning (BC)

- Conditional Imitation Learning

Hierarchical Reinforcement Learning Agents Algorithms

- Hierarchical Actor Critic (HAC)

Memory Types Algorithms

- Hindsight Experience Replay (HER)

- Prioritized Experience Replay (PER)

Keras-RL (developed by Matthias Plappert, employed at OpenAI)

OpenAI Gym

Facebook Horizon

Google Dopamine

Google DeepMind TensorFlow Reinforcement Learning (TRFL)

Coach (Developed by Intel Nervana Systems)

RLlib (highly customizable open source DeepRL framework with support for TF 2.0/PyTorch 1.4 and customization of environments, policies, and actions)

Tensorforce (built on top of Google's TensorFlow 2.0 framework by Alexander Kuhnle, currently with Blue Prism)

Deep Q Learning (DQN)

Double DQN

Deep Deterministic Policy Gradient (DDPG)

Continuous DQN (CDQN or NAF)

Cross-Entropy Method (CEM)

Dueling network DQN (Dueling DQN)

Deep SARSA

Asynchronous Advantage Actor-Critic (A3C)

Proximal Policy Optimization Algorithms (PPO)

Also included is a reference to a series of blog posts and videos 🆕 about Deep Reinforcement Learning.

Introduction to Industry Implementation of Deep RL

Deep RL is one of the key factors powering:

- Medicine: to determine optimal treatments for health conditions and drug therapies.

- Robotics: to improve robotic manipulation with minimal human supervision.

- Finance: to increase profitability in trading.

- Industrial Automation: to integrate intelligence into complex and dynamic systems.

- Self Evolving Control System

- Hyperautomation

- Google AutoML (NAS + Deep RL)

- TRAX (AI with speed in sequence models)

- Autocorrection, autocompletion in Email/Typing/NLP (heady mix of dynamic programming, hidden Markov models, and word embeddings)

- Autonomous Systems

- Smart Deep Gaming

- Many more

Introduction to DeepRL

Overview

The main building blocks of RL/DeepRL are the agent and the environment.

- The environment is the world that the agent lives in and interacts with.

- At every step of interaction, the agent sees a (possibly partial) observation of the state of the world, and then decides on an action to take.

- The environment changes when the agent acts on it, but may also change on its own.

- The agent also perceives a reward signal from the environment, a number that tells it how good or bad the current world state is.

- The goal of the agent is to maximize its cumulative reward, called return. Reinforcement learning methods are ways that the agent can learn behaviors to achieve its goal.
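The interaction loop described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `CoinFlipEnv`, `RandomAgent`, and `run_episode` are hypothetical names invented here, and the `reset`/`step` interface is merely modelled on the style popularised by OpenAI Gym rather than taken from any library.

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: the agent guesses the outcome of a coin flip.

    The observation is simply the current step index, and every episode
    lasts `horizon` steps.
    """

    def __init__(self, horizon=10, seed=0):
        self.horizon = horizon
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # initial observation

    def step(self, action):
        coin = self.rng.randint(0, 1)               # the world changes on its own
        reward = 1.0 if action == coin else 0.0     # scalar reward signal
        self.t += 1
        done = self.t >= self.horizon
        return self.t, reward, done


class RandomAgent:
    """Hypothetical agent that ignores the observation and acts at random."""

    def __init__(self, seed=1):
        self.rng = random.Random(seed)

    def act(self, observation):
        return self.rng.randint(0, 1)


def run_episode(env, agent):
    """Run one episode and return the cumulative reward (the return)."""
    observation = env.reset()
    episode_return = 0.0
    done = False
    while not done:
        action = agent.act(observation)               # agent decides
        observation, reward, done = env.step(action)  # environment responds
        episode_return += reward
    return episode_return


if __name__ == "__main__":
    print(run_episode(CoinFlipEnv(horizon=10), RandomAgent()))
```

A learning agent would replace `RandomAgent.act` with a policy that improves from the rewards it observes; the loop itself stays the same.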

High Level Solution Constructs of DeepRL

- High Level Solution Constructs of Deep Reinforcement Learning through Infographics

<img src="github.com/DeepHiveMind/gateway_to_DeepRein.." alt="Deep Reinforcement Learning High Level Solution Constructs" width="900" height="400" />


- Agent — the learner and the decision maker.

- Environment — where the agent learns and decides what actions to perform.

- Action — a set of actions which the agent can perform.

- State — the state of the agent in the environment.

- Reward — for each action selected by the agent the environment provides a reward. Usually a scalar value.

- Policy — the decision-making function (control strategy) of the agent, which represents a mapping from situations to actions.

- Value function — mapping from states to real numbers, where the value of a state represents the long-term reward achieved starting from that state, and executing a particular policy.

- Function approximator — refers to the problem of inducing a function from training examples. Standard approximators include decision trees, neural networks, and nearest-neighbor methods

- Markov decision process (MDP) — A probabilistic model of a sequential decision problem, where states can be perceived exactly, and the current state and action selected determine a probability distribution on future states. Essentially, the outcome of applying an action to a state depends only on the current action and state (and not on preceding actions or states).

- Dynamic programming (DP) — is a class of solution methods for solving sequential decision problems with a compositional cost structure. Richard Bellman was one of the principal founders of this approach.

- Monte Carlo methods — A class of methods for learning of value functions, which estimates the value of a state by running many trials starting at that state, then averages the total rewards received on those trials.

- Temporal Difference (TD) algorithms — A class of learning methods, based on the idea of comparing temporally successive predictions. Possibly the single most fundamental idea in all of reinforcement learning.

- Model — The agent’s view of the environment, which maps state-action pairs to probability distributions over states. Note that not every reinforcement learning agent uses a model of its environment
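To make the value function, Monte Carlo, and Temporal Difference entries above more concrete, here is a minimal sketch that estimates state values on a five-state random walk (a common textbook example, not something taken from this repo). All names (`generate_episode`, `monte_carlo`, `td_zero`) and the settings (step size 0.01, undiscounted return, reward 1 only when exiting on the right) are assumptions chosen for illustration.

```python
import random

N_STATES = 5  # non-terminal states 0..4; stepping off either end terminates
# Known analytic values for this walk: 1/6, 2/6, ..., 5/6
TRUE_VALUES = [(i + 1) / (N_STATES + 1) for i in range(N_STATES)]


def generate_episode(rng):
    """Random walk from the middle state; reward 1 only when exiting right.

    Returns a list of (state, reward, next_state); next_state is None
    when the transition ends the episode.
    """
    state = N_STATES // 2
    transitions = []
    while True:
        next_state = state + rng.choice((-1, 1))
        if next_state < 0:
            transitions.append((state, 0.0, None))
            return transitions
        if next_state >= N_STATES:
            transitions.append((state, 1.0, None))
            return transitions
        transitions.append((state, 0.0, next_state))
        state = next_state


def monte_carlo(episodes, rng):
    """Every-visit Monte Carlo: average the (undiscounted) return after each visit."""
    totals = [0.0] * N_STATES
    counts = [0] * N_STATES
    for _ in range(episodes):
        g = 0.0
        for state, reward, _ in reversed(generate_episode(rng)):
            g += reward            # return from this time step onward
            totals[state] += g
            counts[state] += 1
    return [t / c if c else 0.0 for t, c in zip(totals, counts)]


def td_zero(episodes, rng, alpha=0.01):
    """TD(0): move V(s) toward reward + V(next state) after every step."""
    values = [0.5] * N_STATES
    for _ in range(episodes):
        for state, reward, next_state in generate_episode(rng):
            next_value = 0.0 if next_state is None else values[next_state]
            td_error = reward + next_value - values[state]
            values[state] += alpha * td_error
    return values


if __name__ == "__main__":
    print("true:", [round(v, 3) for v in TRUE_VALUES])
    print("MC:  ", [round(v, 3) for v in monte_carlo(20000, random.Random(0))])
    print("TD:  ", [round(v, 3) for v in td_zero(20000, random.Random(1))])
```

Monte Carlo waits until the episode ends and averages complete returns, while TD(0) updates after every step by comparing two successive predictions. Both should land close to the analytic values.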

Please refer to the 📜 ARTICLE for more detail on the basic constructs of the DeepRL ecosystem.

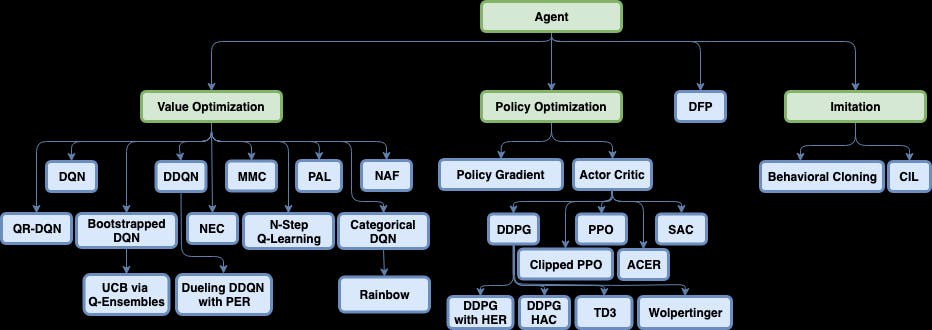

Significant Deep RL Models

Overview of Deep RL algorithms through infographics (a useful taxonomy of algorithms in modern RL)

Value Optimization Agents Algorithms

- Deep Q Network (DQN)

- Double Deep Q Network (DDQN)

- Dueling Q Network

- Mixed Monte Carlo (MMC)

- Persistent Advantage Learning (PAL)

- Categorical Deep Q Network (C51)
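As a rough illustration of how the first two entries in this list differ, the sketch below computes the bootstrap target for a single transition under DQN and under Double DQN. The function names, the default discount of 0.99, and the example Q-values are assumptions made up for this sketch; a real agent would obtain the Q-values from its online and target networks.

```python
def dqn_target(reward, done, q_target_next, gamma=0.99):
    """DQN target: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(q_target_next)


def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN target: the online network selects, the target network evaluates."""
    if done:
        return reward
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best_action]


if __name__ == "__main__":
    # Hypothetical Q-values for the next state (three actions).
    q_online_next = [1.0, 2.0, 0.5]
    q_target_next = [3.0, 1.5, 0.2]
    print(dqn_target(1.0, False, q_target_next, gamma=0.9))
    print(double_dqn_target(1.0, False, q_online_next, q_target_next, gamma=0.9))
```

Decoupling action selection from action evaluation is what lets Double DQN reduce the overestimation that comes from taking a maximum over noisy estimates.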

Policy Optimization Agents Algorithms

- Policy Gradients (PG) | Multi Worker Single Node

- Asynchronous Advantage Actor-Critic (A3C) | Multi Worker Single Node

- Deep Deterministic Policy Gradients (DDPG) | Multi Worker Single Node

- Proximal Policy Optimization (PPO)

General Agents Algorithms

- Direct Future Prediction (DFP) | Multi Worker Single Node

Advanced DeepRL Models

Imitation Learning Agents Algorithms

- Behavioral Cloning (BC)

- Conditional Imitation Learning

Hierarchical Reinforcement Learning Agents Algorithms

Memory Types Algorithms

Exploration Techniques Algorithms

- E-Greedy

- Boltzmann

- Normal Noise

- Truncated Normal Noise

- Bootstrapped Deep Q Network
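Several of the exploration techniques listed above fit in a few lines each. The following is a minimal standard-library sketch, not the implementation of any particular framework: the function names and parameters are hypothetical, and the noise helper simply clips to the action bounds, which is simpler than sampling from a true truncated normal. Bootstrapped DQN is not covered here because it needs an ensemble of Q-heads.

```python
import math
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def boltzmann_probabilities(q_values, temperature=1.0):
    """Softmax over Q-values; higher temperature means more uniform exploration."""
    scaled = [q / temperature for q in q_values]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann(q_values, temperature=1.0, rng=random):
    """Sample an action in proportion to its Boltzmann probability."""
    probs = boltzmann_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


def gaussian_noise_action(action, sigma, low, high, rng=random):
    """Normal-noise exploration for a continuous action, clipped to [low, high]."""
    noisy = action + rng.gauss(0.0, sigma)
    return min(high, max(low, noisy))


if __name__ == "__main__":
    q = [0.1, 0.9, 0.3]
    print(epsilon_greedy(q, epsilon=0.1))
    print(boltzmann_probabilities(q, temperature=0.5))
    print(gaussian_noise_action(0.2, sigma=0.1, low=-1.0, high=1.0))
```

Epsilon-greedy and Boltzmann apply to discrete action spaces (for example DQN-style agents), while additive noise is the usual choice for continuous-action agents such as DDPG.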

Comparison of most common DeepRL frameworks

- Here is the list of the most commonly used DeepRL frameworks

Keras-RL (developed by Matthias Plappert, employed at OpenAI)

OpenAI Gym

Facebook Horizon

Google Dopamine

Google DeepMind TensorFlow Reinforcement Learning (TRFL)

Coach (Developed by Intel Nervana Systems)

RLlib (highly customizable open source DeepRL framework with support for TF 2.0/PyTorch 1.4 and customization of environments, policies, and actions)

Tensorforce (built on top of Google's TensorFlow 2.0 framework by Alexander Kuhnle, currently with Blue Prism)

- A few other DeepRL Frameworks (not so popular)

MAgent

SLM-Lab

DeeR

Garage

Surreal

RLgraph

Simple RL

- Comparison of Most commonly used Deep RL frameworks

Refer to the 📜 ARTICLE for a comparison of reinforcement learning frameworks such as Dopamine, RLlib, Keras-RL, Coach, TRFL, and Tensorforce.

Introduction to DeepRL Models supported by Keras RL framework

Refer to the Repo for how to implement the following important agent models using Keras-RL:

Deep Q Learning (DQN)

Double DQN

Deep Deterministic Policy Gradient (DDPG)

Continuous DQN (CDQN or NAF)

Cross-Entropy Method (CEM)

Dueling network DQN (Dueling DQN)

Deep SARSA

Asynchronous Advantage Actor-Critic (A3C)

Proximal Policy Optimization Algorithms (PPO)

DeepRL HANDS ON with Keras RL GYM

- keras-rl implements some state-of-the-art deep reinforcement learning algorithms in Python and seamlessly integrates with the deep learning library Keras.

- Furthermore, keras-rl works with OpenAI Gym out of the box. This means that evaluating and playing around with different algorithms is easy.

- keras-rl can be extended according to our own custom needs. One can use built-in Keras callbacks and metrics or define one's own.

Please refer to the https://github.com/Deep-Mind-Hive/keras-rl/tree/master/examples repo for DeepRL agents with the following algorithmic implementations:

- CEM_cartpole.py

- DDPG_mujoco.py

- DDPG_pendulum.py

- DQN_atari.py

- DQN_cartpole.py

- DUEL_DQN_cartpole.py

- NAF_pendulum.py

- SARSA_cartpole.py

Please refer to the repo DeepRL HANDS-ON with Keras & TF & OpenAI-Gym for the following DeepRL model implementations:

- Actor Critic Method

- Deep Deterministic Policy Gradient (DDPG)

- Deep Q-Learning for Atari Breakout

Interesting DeepRL framework RLlib

Refer to the RLlib framework for its USPs (customization features).

RLlib provides ways to customize almost all aspects of training, including:

- the environment,

- neural network model,

- action distribution, and

- policy definitions

The following infographic shows the customization flexibility offered by RLlib.

The diagram also provides a conceptual overview of the data flow between different components in RLlib:

- We start with an *Environment*, which, given an *action*, produces an observation.

- The observation is then preprocessed by a *Preprocessor* and a *Filter* (e.g. for running mean normalization) before being sent to a *neural network Model* (agent Model with Policy).

- The model output is in turn interpreted by an *ActionDistribution* to determine the next action.
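The data flow above can be mimicked with a toy pipeline in plain Python. To be clear, none of this is RLlib's API: `ToyEnv`, `preprocess`, `RunningMeanFilter`, `model`, `sample_action`, and `rollout` are hypothetical stand-ins written only to show the order of the stages, with arbitrary fixed weights in place of a trained network.

```python
import math
import random


class ToyEnv:
    """Hypothetical environment: the observation is a random 2-vector."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def _observe(self):
        return [self.rng.uniform(0, 10), self.rng.uniform(-1, 1)]

    def reset(self):
        return self._observe()

    def step(self, action):
        reward = 1.0 if action == 1 else 0.0
        return self._observe(), reward


def preprocess(observation):
    """Preprocessor stage: coerce the raw observation to a flat list of floats."""
    return [float(x) for x in observation]


class RunningMeanFilter:
    """Filter stage: normalize features by a running mean and std (Welford update)."""

    def __init__(self, size):
        self.count = 0
        self.mean = [0.0] * size
        self.m2 = [0.0] * size

    def __call__(self, features):
        self.count += 1
        normalized = []
        for i, x in enumerate(features):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.count
            self.m2[i] += delta * (x - self.mean[i])
            variance = self.m2[i] / self.count
            std = math.sqrt(variance) if variance > 0 else 1.0
            normalized.append((x - self.mean[i]) / std)
        return normalized


def model(features, weights):
    """Model stage: a single linear layer producing one logit per action."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def sample_action(logits, rng):
    """Action-distribution stage: softmax over the logits, then sample."""
    shift = max(logits)
    exps = [math.exp(logit - shift) for logit in logits]
    return rng.choices(range(len(logits)), weights=exps, k=1)[0]


def rollout(steps=5, seed=0):
    """Environment -> Preprocessor -> Filter -> Model -> ActionDistribution."""
    rng = random.Random(seed)
    env = ToyEnv(seed)
    obs_filter = RunningMeanFilter(size=2)
    weights = [[0.5, -0.2], [-0.3, 0.8]]  # 2 actions x 2 features, arbitrary values
    observation = env.reset()
    actions = []
    for _ in range(steps):
        features = obs_filter(preprocess(observation))
        action = sample_action(model(features, weights), rng)
        observation, _reward = env.step(action)
        actions.append(action)
    return actions


if __name__ == "__main__":
    print(rollout())
```

In RLlib each of these stages is a pluggable component, which is where the customization flexibility comes from; consult the RLlib documentation for the actual class names and registration hooks.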

RLlib supports both major deep learning frameworks for the neural network Model (agent Model with Policy):

- TensorFlow 2.0

- PyTorch 1.4.0

DeepRL HANDS ON additional

Q-learning with FrozenLake

Deep Q-learning with Doom

-📜 ARTICLE // DOOM IMPLEMENTATION

-📹 Create a DQN Agent that learns to play Atari Space Invaders 👾

Policy Gradients with Doom

Improvements in Deep Q-Learning

-📜 ARTICLE // Doom Deadly Corridor IMPLEMENTATION

Advantage Actor Critic (A2C)

Proximal Policy Gradients

Curiosity Driven Learning made easy

- 📜 ARTICLE